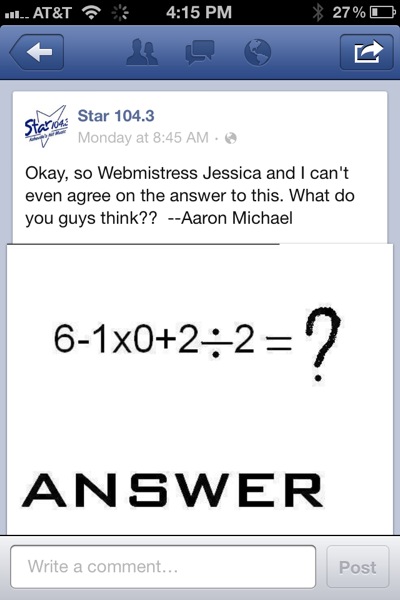

I've been thinking a lot lately about times when math teachers force students to do things without technology, or "by hand," and then later (or perhaps never) show them how they can do the procedure faster or more efficiently with technology.

To illustrate my first point, I have to tell you what happened with me. And, as will be no surprise to anyone, this example centers around AP Stats.

When I teach confidence intervals and hypothesis tests, I follow the same route that most AP Stats teachers follow. First, students find the interval and the p-value by hand. I don't show them that their calculator has a button for each of these procedures that will do all the work for them. I do this, I tell myself, because it will help them remember everything the calculator is doing in the background when they press these buttons.

But here's my dilemma. About six weeks later, I challenge them to do the procedure by hand. I give them limited information so that they have to reconstruct the p-value in a different way than they have for most of the unit, which eliminates the possibility that they can use the faster method. And guess what? They don't remember. Not a clue. So I spent two weeks restricting their calculator use so that they would remember and understand certain concepts (because I am convinced that the faster way eliminates the understanding of those concepts), but when it comes time to show me that those two weeks of by-hand work actually paid off? Goose egg!

Now before I provide my suggested solution, let me talk about another situation: finding the standard deviation.

Here I differ from most of my colleagues. Most teachers will tell you something like this:

"Well, I know we can find standard deviation on our calculators, but I make them figure out 1 or 2 by hand, that way they have a feel for what's really going on."

I think this is so much baloney. Here's my issue. When it comes to writing a test with my colleagues, they never offer me an assessment question that they believe will measure whether the students really "got the feeling." In fact, when the test is said and done, I don't have colleagues who taught this way saying, "See! Here is the question that my kids got right and your kids didn't. That's because my kids calculated the standard deviation by hand!" I have yet to see a problem where students seem to benefit from 20 minutes of 1930s-style by-hand work. If anything, it just makes them hate your class a little bit.

[tangent: Many of you teach multiple periods of stats. Run an experiment. Have one period just use their calculators. Have the other spend 20 minutes calculating standard deviation by hand. Then look at your tests and see if there's a difference!]

In the end, both of these scenarios boil down to writing high-quality assessment items. In the case of the standard deviation, I have never observed that calculating it by hand helps students successfully describe the standard deviation as the typical distance of the data from the mean. But if you're going to really assess your students' understanding and not just their button-pushing skills, you'd better write an open-ended question that assesses precisely this concept. (AP Stats, 2007 #1 is a nice starting point.) When your goal is for students to be able to meaningfully interpret this value, I'm betting you'll change how you spend your class time. And by-hand calculation probably won't be taking up that time.
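For readers who want to see exactly what the two routes look like side by side, here is a minimal sketch in Python rather than on a graphing calculator. The data set `scores` and the function name `sample_sd_by_hand` are made up for illustration. The "by-hand" function spells out the usual steps (mean, deviations, squared deviations, divide by n - 1, square root), and the last line is the one-button equivalent.

```python
from math import sqrt
from statistics import stdev


def sample_sd_by_hand(data):
    """Sample standard deviation, written out one step at a time."""
    n = len(data)
    mean = sum(data) / n                       # step 1: the mean
    deviations = [x - mean for x in data]      # step 2: distance of each value from the mean
    squared = [d ** 2 for d in deviations]     # step 3: square each deviation
    variance = sum(squared) / (n - 1)          # step 4: average them, dividing by n - 1
    return sqrt(variance)                      # step 5: back to the original units


# Hypothetical data, chosen only so the arithmetic is easy to follow.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

by_hand = sample_sd_by_hand(scores)   # the 20-minute route
built_in = stdev(scores)              # the "button" route
```

Both routes return the same number. Neither one, on its own, tells you whether a student can describe that number as a typical distance from the mean.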

With p-values, I definitely need to up my game on my assessments. I need to write assessment items that test my students' abilities to find a p-value without being able to press a test button on their calculator. This is a skill I value. There is TOO much magic happening with the technology. But instead of unrealistically forcing a by-hand calculation by hiding calculator functionality, I need to write creative test questions that require these kinds of skills. These questions aren't actually hard to write; I just haven't done so (simple example: provide students with a test statistic and the hypotheses and ask for the conclusion). Part of what I'm learning (ever so slowly, it seems) is that when students are failing to produce the kind of understanding I'm hoping for, I need to figure out both formative and summative ways to assess that concept more frequently. Because if I'm not forcing them to answer questions about a concept, they're letting it slide out of their minds and filling the space with Snapchats!
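To make the "test statistic plus hypotheses" question concrete, here is a rough sketch of the reasoning such an item asks for, written in Python. It assumes a z test statistic (so the standard normal model applies) and a significance level of 0.05; the function names `p_value_from_z` and `conclusion` and the value `z = 2.10` are invented for this example, not taken from any particular exam.

```python
from statistics import NormalDist


def p_value_from_z(z, alternative="two-sided"):
    """P-value for a z test statistic under the standard normal model."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    if alternative == "greater":      # Ha: parameter > hypothesized value
        return 1 - std_normal.cdf(z)
    if alternative == "less":         # Ha: parameter < hypothesized value
        return std_normal.cdf(z)
    if alternative == "two-sided":    # Ha: parameter != hypothesized value
        return 2 * (1 - std_normal.cdf(abs(z)))
    raise ValueError("alternative must be 'greater', 'less', or 'two-sided'")


def conclusion(p_value, alpha=0.05):
    """Compare the p-value to the significance level."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


# Hypothetical test item: students are handed z = 2.10 and a two-sided alternative.
z = 2.10
p = p_value_from_z(z, "two-sided")
decision = conclusion(p)
```

The point of a question like this is that there is no test button to press: students have to know which tail (or tails) the alternative hypothesis calls for, and what the resulting area means.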

One final, and much more general observation. The best math teachers are always working towards understanding. They will argue with their colleagues about activities and manipulatives that help develop understanding in our students, but that take some extra time. But sometimes (not always) the assessments do not actually measure the understanding that we spent two extra days working towards. So I finish with this challenge: The next time you say to yourself

"I'm spending an extra day on this topic so that students really understand it!"

make sure you have an assessment item ready to see if that time paid off.